Machine Learning with Green AI: Using Training Strategies, OMA-ML saves up to 70% of its training energy while keeping its effectiveness.

Paper published at the 18th Conference on Computer Science and Intelligence Systems 2023

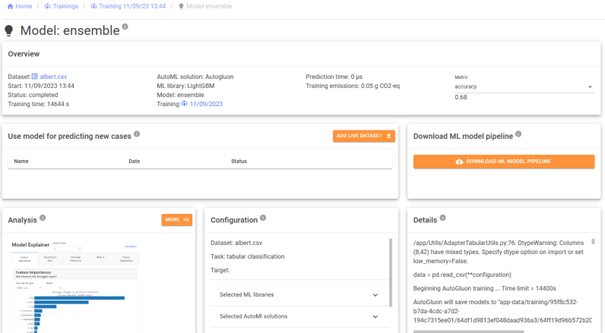

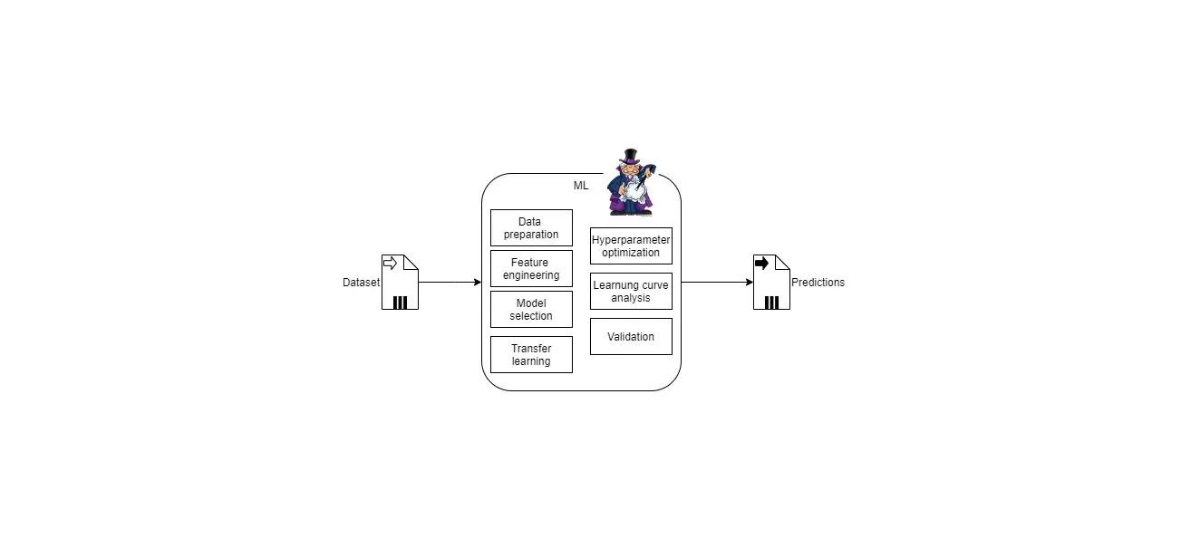

OMA-ML is a Meta Automated Machine Learning (Meta AutoML) platform that allows AI experts as well as domain experts (e.g. professionals from the field of medicine) to quickly generate Machine Learning (ML) models. OMA-ML accomplishes this by leveraging Automated Machine Learning (AutoML) solutions (e.g. AutoKeras). AutoML solutions automatically perform the data science workflow (e.g. CRISP-DM) normally performed by an AI expert. OMA-ML executes all available AutoML solutions, which search in parallel for their best model. Afterwards, OMA-ML collects the models and generates executable program code.

Users can then use their preferred ML model within OMA-ML for further predictions or download the model for local execution. While OMA-ML makes access to ML models convenient, it also has an energy issue: generating several ML models consumes a great amount of energy. In most cases, users will only use the most effective model (the one with the highest prediction performance). The remaining models are never used, which makes OMA-ML “red AI” (wasting a lot of energy).

This research aims to address this issue by adopting Training Strategies for Meta AutoML. Training Strategies are approaches that optimize the training process by improving either its efficiency or its effectiveness. Using the Top-3 Strategy, OMA-ML could save up to 70% of its energy needs while keeping model effectiveness identical.

This is accomplished by performing an initial pre-training that uses only a subset of the total training time and training data. The resulting models are then evaluated for their effectiveness, and only the three best AutoML solutions start a new training run using the full training data and the remaining time.
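The two phases described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the OMA-ML implementation: the `TrainFn` interface, the function `top_k_strategy`, the stub solutions `automl_a` to `automl_e` with their scores, and the 10% pre-training fractions are all hypothetical and do not come from the paper.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface: train(data, time_budget_s) -> validation score
# (higher is better). Real AutoML solutions expose different APIs.
TrainFn = Callable[[Sequence, float], float]


def top_k_strategy(
    solutions: Dict[str, TrainFn],
    data: Sequence,
    total_time_s: float,
    k: int = 3,
    pretrain_time_fraction: float = 0.1,  # assumed value, not from the paper
    pretrain_data_fraction: float = 0.1,  # assumed value, not from the paper
) -> Dict[str, float]:
    """Pre-train every solution on a small budget, then fully train the best k."""
    # Phase 1: short pre-training on a data subset (here simply the first rows;
    # a real system would more likely draw a random or stratified sample).
    subset = data[: max(1, int(len(data) * pretrain_data_fraction))]
    pretrain_time_s = total_time_s * pretrain_time_fraction
    pretrain_scores = {
        name: train(subset, pretrain_time_s) for name, train in solutions.items()
    }

    # Rank by pre-training score and keep only the k best solutions.
    finalists = sorted(pretrain_scores, key=pretrain_scores.get, reverse=True)[:k]

    # Phase 2: the finalists start a new training run with the full data
    # and the remaining time; all other solutions are stopped.
    remaining_time_s = total_time_s - pretrain_time_s
    return {name: solutions[name](data, remaining_time_s) for name in finalists}


def make_stub(name: str, score: float, calls: List[Tuple[str, int]]) -> TrainFn:
    """Stand-in for an AutoML solution: logs the call and returns a fixed score."""
    def train(data: Sequence, time_budget_s: float) -> float:
        calls.append((name, len(data)))
        return score
    return train


calls: List[Tuple[str, int]] = []
stub_scores = {"automl_a": 0.61, "automl_b": 0.83, "automl_c": 0.78,
               "automl_d": 0.55, "automl_e": 0.80}
solutions = {n: make_stub(n, s, calls) for n, s in stub_scores.items()}
final_scores = top_k_strategy(solutions, list(range(1000)), total_time_s=600.0)
print(list(final_scores))
```

With these stub scores, only three of the five solutions receive a full training run. The energy saving comes from the two solutions that stop after the short pre-training phase; how large it is in practice depends on the chosen fractions and on the AutoML solutions involved.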

This research has been published and presented at the 18th Conference on Computer Science and Intelligence Systems 2023:

Alexander Zender, Bernhard G. Humm, Tim Pachmann: Improving the Efficiency of Meta AutoML via Rule-based Training Strategies. In Maria Ganzha, Leszek Maciaszek, Marcin Paprzycki, Dominik Slezak (Eds.): Proceedings of the 18th Conference on Computer Science and Intelligence Systems. Annals of Computer Science and Information Systems vol. 35, pp. 235–246. IEEE 2023. ISBN 978-83-967447-8-4

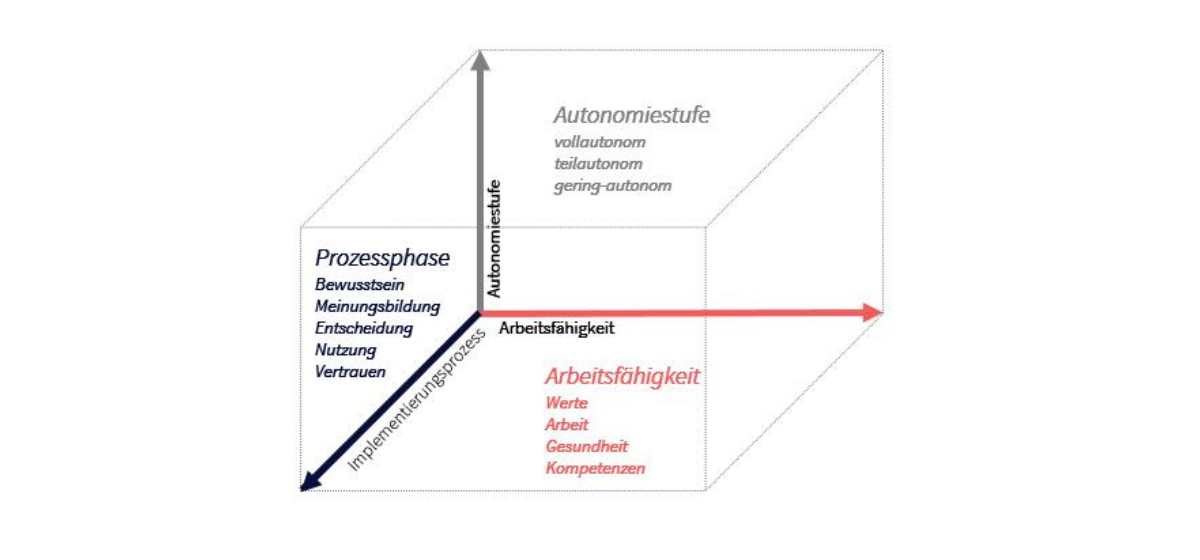

The paper presents a survey of 14 AutoML training strategies that can be applied to Meta AutoML. The survey categorizes these strategies by their broader goal, their advantage, and their adaptability to Meta AutoML. The paper also introduces the concept of rule-based training strategies and a proof-of-concept implementation in the Meta AutoML platform OMA-ML. This concept is based on the blackboard architecture and uses a rule-based reasoner to apply training strategies. Applying the “top-3” training strategy can save up to 70% of the energy while maintaining a similar ML model performance.
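To give a rough idea of how a rule-based reasoner on a blackboard can apply a training strategy, here is a minimal Python sketch. It is not taken from the paper or from OMA-ML: the `Rule` class, the `run_reasoner` loop, the blackboard keys (`phase`, `pretrain_scores`, `active_solutions`) and the stub scores are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# The blackboard is modelled as a shared dictionary holding the training state.
Blackboard = Dict[str, Any]


@dataclass
class Rule:
    name: str
    condition: Callable[[Blackboard], bool]  # when may the rule fire?
    action: Callable[[Blackboard], None]     # how does it change the blackboard?


def run_reasoner(blackboard: Blackboard, rules: List[Rule],
                 max_cycles: int = 10) -> List[str]:
    """Fire one applicable rule per cycle until no rule applies any more."""
    fired: List[str] = []
    for _ in range(max_cycles):
        applicable = [r for r in rules
                      if r.name not in fired and r.condition(blackboard)]
        if not applicable:
            break
        rule = applicable[0]
        rule.action(blackboard)
        fired.append(rule.name)
    return fired


def keep_top_3(bb: Blackboard) -> None:
    """Keep only the three best pre-trained solutions for the final training."""
    scores = bb["pretrain_scores"]
    bb["active_solutions"] = sorted(scores, key=scores.get, reverse=True)[:3]
    bb["phase"] = "final_training"


top_3_rule = Rule(
    name="top-3",
    condition=lambda bb: bb["phase"] == "pretraining_done"
    and len(bb["pretrain_scores"]) > 3,
    action=keep_top_3,
)

# Hypothetical blackboard state after the pre-training phase has finished.
blackboard: Blackboard = {
    "phase": "pretraining_done",
    "pretrain_scores": {"automl_a": 0.61, "automl_b": 0.83, "automl_c": 0.78,
                        "automl_d": 0.55, "automl_e": 0.80},
    "active_solutions": ["automl_a", "automl_b", "automl_c",
                         "automl_d", "automl_e"],
}
fired = run_reasoner(blackboard, [top_3_rule])
print(fired, blackboard["active_solutions"])
```

Expressing a strategy as a condition and an action keeps it separate from the training loop, so in this sketch another strategy could be added as a further `Rule` without changing `run_reasoner`.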